Devlog 59 - Music Loading, Automatic Beat Detection

Published: October 26, 2023

It feels like I've been really inconsistent with these devlog entries, but looking back it seems like I have been basically putting out one per month quite regularly, so maybe it's not as haphazard as I thought!

Music Adjustment UI

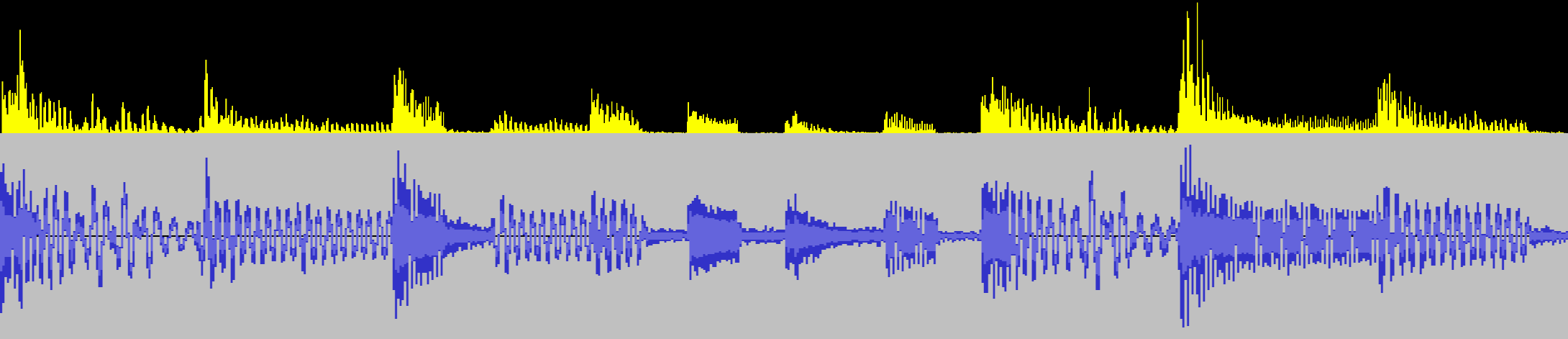

Last week I talked about the waveform display that I implemented using some fancy parallel burst compilation. My main use case for that functionality was to build out a UI for loading a music file and (more importantly) specifying and adjusting the tempo and start time for that file.

After a lot of work, here's what I ended up with:

It's working pretty well! You can preview the song, zoom into the waveform, and drag around the beat markers to adjust the timing to your liking, or simply type in the tempo and start time manually if you already have it on hand. There's also a built-in metronome function so you can verify your work.

This seemingly-simple UI widget really involved a lot of different moving pieces and little quality-of-life touches. There's smooth scrolling and zooming, and I needed to make sure that the beat markers appear and disappear as you scroll through the track. Dragging the beat markers past the end of the current view also makes it scroll automatically, and some of the beat measures fade out if you zoom out far enough (to avoid clutter).

It's worth noting that I took some of the functionality that I developed along the way and added it in other places. For example, since I needed to implement a metronome to give audio feedback on whether your song timings are correct, I also added that as an option to use in-game. I also added the waveform display to the background of the input timeline while editing, to serve as an additional reference:

Automatic Tempo Detection

While the "respawn loop" button does nothing at all yet (that is supposed to be a separate dialog that allows you to provide an optional short audio loop that will play during respawns), the rest of this big devlog post is going to be talking about that other rather inconspicuous button, "Auto-detect".

You might have already guessed it, but clicking this button performs a whole bunch of math and signal processing in order to procedurally analyze the music file and attempt to automatically determine both the tempo and start time of the music file. Here's a short video of that magic in action!

It's definitely not perfect, and it takes a few seconds to churn through all of the calculations (video above has that processing time skipped), but it actually does a pretty good job in a lot of cases! It looks easy since it's just a single magic button, but I ended up diving quite deep into the rabbit hole of audio signal processing and beat/tempo detection algorithms in order to implement this...

Before I start explaining the methodology here, I wanted to point out something that might surprise you a bit. You might think that my goal with this automatic tempo detection is to make it work so well that manually setting the timing data for a song is no longer necessary. That's a nice hope to have, but I'm not really confident I can do that. If anything, for me it's the other way around: I want the manual beat-setting interface to work so well that the automatic tempo detection is unnecessary! In that sense, you could say that the automatic detection is really just a secondary nice-to-have feature that I could honestly have dropped altogether. But I'm a perfectionist, and I found the problem interesting, so I dove in...

Resources and References

While Unity provides basic access to audio data (get the samples out of an audio clip as a looooong array of floating point numbers), doing any sort of more involved operations (normalization, convolution, filtering) is something you'll want to use a specialized C# library for (don't reinvent the wheel!). NWaves was by far the most user-friendly and sensible one that I personally found (though I did end up re-implementing particular parts using Unity's job/burst systems, for performance reasons). NWaves was a huge boon for me and let me do things like Short-time Fourier Transforms without having to learn a bunch of new complicated math and attempt to implement it from scratch.

Also, I rarely find myself doing this, but for this particular problem I ended up consulting a whole bunch of research papers that have been written about the topic, some of which were extremely helpful.

"Evaluating the Online Capabilities of Onset Detection Methods" by Böck et al. in particular provides a pretty nice survey of various approaches to detecting note onsets -- this is not 100% equivalent to tempo detection but is closely related.

"Accurate Tempo Estimation based on Recurrent Neural Networks and Resonating Comb Filters", also by Böck et al., was one of the other more helpful reads.

Detecting a Tempo

The process of tempo detection basically consists of the following steps at a high level:

- Do some preliminary processing on the audio signal to prepare it for further steps

- Run the audio through some sort of detection function(s) that is designed to highlight beats/onsets

- Determine what tempo best lines up with the detected beats

Preliminary Processing

This step is pretty boring: we basically make sure that the audio is normalized and converted from stereo into mono. I also add some silence to the beginning as a buffer and scale the audio levels a bit (apparently working in a logarithmic scale tends to perform better).
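As a rough illustration of the preprocessing described above, here is a minimal sketch in plain Python (not the C#/NWaves code used in the game). The function names, the interleaved-channel input layout, the padding length, and the `gain` constant are all assumptions made for the example, and exactly where the logarithmic scaling gets applied in the real pipeline isn't specified here.

```python
import math

def preprocess(interleaved, channels=2, sample_rate=44100, pad_seconds=0.5):
    """Downmix to mono, peak-normalize, and prepend a short silent buffer."""
    # Downmix: average the channels of each interleaved frame (L, R, L, R, ...).
    frame_count = len(interleaved) // channels
    mono = [
        sum(interleaved[i * channels + c] for c in range(channels)) / channels
        for i in range(frame_count)
    ]
    # Peak-normalize so the loudest sample has magnitude 1.0.
    peak = max((abs(s) for s in mono), default=0.0)
    if peak > 0.0:
        mono = [s / peak for s in mono]
    # Prepend silence (pad_seconds is a hypothetical value, not from the post).
    pad = [0.0] * int(pad_seconds * sample_rate)
    return pad + mono

def log_compress(magnitude, gain=1000.0):
    """Map a non-negative magnitude onto a logarithmic scale (gain is assumed)."""
    return math.log1p(gain * magnitude)
```

The log compression is kept as a separate helper because it can just as easily be applied to spectral magnitudes later on as to the raw levels.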

In some approaches the audio is filtered and split into multiple parts -- for example one copy with only low frequencies, another with mid frequencies, and another with higher frequencies. I didn't find this to work super well for me and it also adds additional processing time since each filtered copy needs to be processed separately, so I just stuck with a single unified copy of the audio. But it's worth noting that filtering is a relatively common technique here, and your mileage may vary.

Spectral Analysis

Now we need to take the music track and come up with some way to detect onsets or "strong attacks" in the audio.

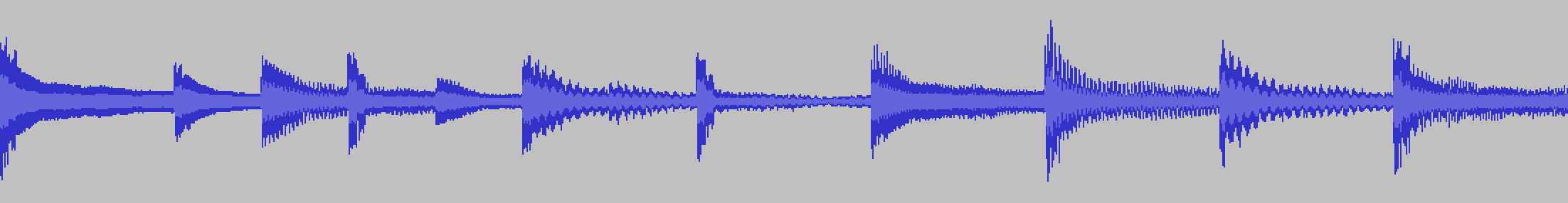

The first thing you might think of is to look at the places in the audio where the volume is loudest. That might work decently well for a single section of music with an isolated instrument that looks like this:

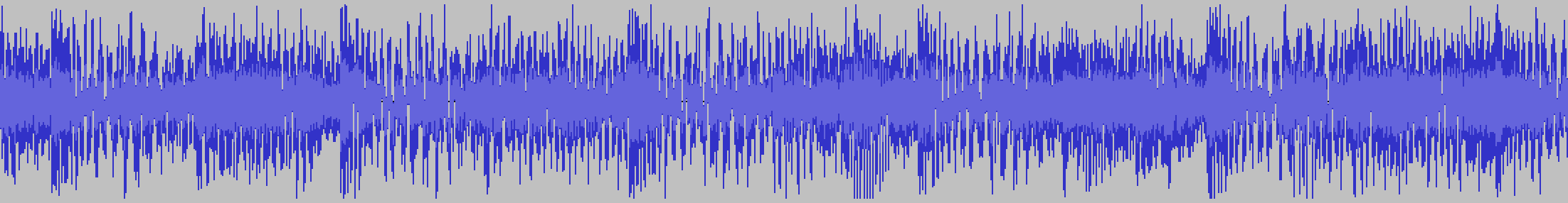

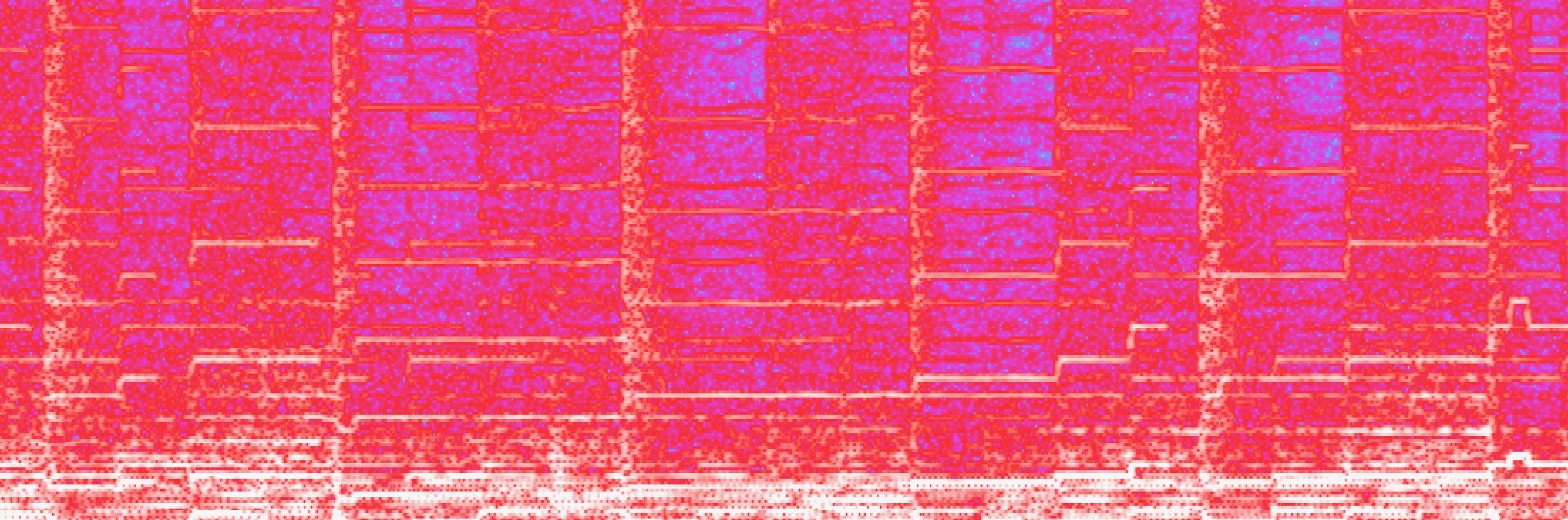

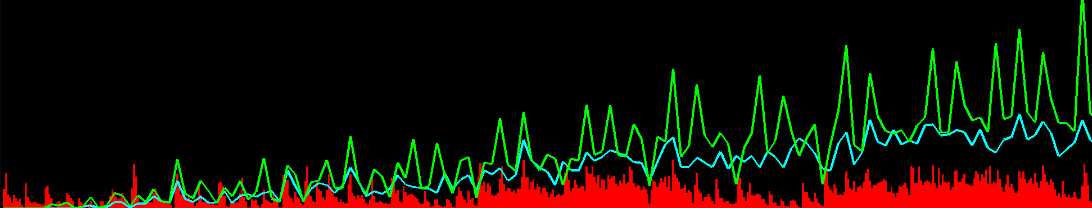

But for a loud song that has many different elements going on at the same time, the waveform looks more like this:

Part of the problem here is that all of the different sound frequencies that make up the song are represented together in a single waveform (one big array of floating point numbers), so it's almost impossible to isolate different musical events.

The Fourier Transform can help us here by converting a single audio signal into a breakdown of the different frequencies that comprise that signal. If you've ever seen any sort of spectrum visualizer like this one, the Fourier Transform is what's being used to evaluate how tall each "bar" in the spectrum is:

Here's the same complex waveform from earlier above, but this time displayed alongside its spectrogram (generated using a form of the Fourier Transform). Notice how you can not only see a vertical line pattern (corresponding to the big kick drum hits), but you can also see distinct horizontal bars that correspond to different notes being played on the lead melody synth.
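To make the "breakdown of frequencies" idea concrete, here is a tiny sketch using a naive discrete Fourier transform in plain Python. It is far too slow for real audio (a library FFT such as the one in NWaves is the practical choice), and the test signal and function name are made up for the example.

```python
import cmath
import math

def dft_magnitudes(samples):
    """Return the magnitude of each frequency bin from 0 up to Nyquist."""
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):
        acc = 0j
        for t, x in enumerate(samples):
            # Correlate the signal with a complex sinusoid of k cycles per window.
            acc += x * cmath.exp(-2j * math.pi * k * t / n)
        mags.append(abs(acc))
    return mags

# Mix two sine waves that complete 3 and 10 cycles over a 64-sample window.
n = 64
signal = [
    math.sin(2 * math.pi * 3 * t / n) + 0.5 * math.sin(2 * math.pi * 10 * t / n)
    for t in range(n)
]
mags = dft_magnitudes(signal)

# The two strongest bins are exactly the two frequencies that were mixed in.
strongest = sorted(range(len(mags)), key=lambda k: mags[k], reverse=True)[:2]
```

In the time domain the two tones are summed into one squiggle, but in the frequency domain they land in separate bins (3 and 10), which is what makes it possible to tell musical events apart.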

Onset Detection

NWaves can perform "Short-Time Fourier Transforms" in order to generate the equivalent of the above spectrogram, which is great. However, we still need a programmatic way to get from the spectrogram to some sort of evaluation of where note/beat onsets are.

There are various approaches to doing this. In fact, some of the best results come from neural network techniques...which unfortunately are a little too far out of my wheelhouse for me to implement.

Instead I went with a simpler (well, kind of) approach, detailed in this paper. I basically take each of the sinusoidal frequencies (that are given by the Fourier Transform) and at each point in time, evaluate the change in energy and phase of that frequency. So if the energy level in a certain frequency goes up suddenly, that's a good indicator of a note starting. Similarly, if the phase of that frequency changes significantly, that's also an indicator of a note starting or changing. I add up all of the "change amounts" for every frequency and end up with a single number for that moment that describes "how much did the frequencies change in total at this moment?"
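One common way to fold energy change and phase change into a single number is a "complex domain" onset function: predict each frequency bin from its two previous frames (same magnitude, steadily advancing phase) and measure how far the actual value lands from that prediction. The sketch below assumes that formulation, which may differ in detail from the paper's exact formula and from the game's implementation. The frame size, hop size, and function names are hypothetical, and the naive DFT is only for illustration.

```python
import cmath
import math

def stft(samples, frame_size=64, hop=16):
    """Short-time Fourier transform with a Hann window (naive DFT per frame)."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * i / frame_size)
              for i in range(frame_size)]
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        chunk = [samples[start + i] * window[i] for i in range(frame_size)]
        frames.append([
            sum(x * cmath.exp(-2j * math.pi * k * i / frame_size)
                for i, x in enumerate(chunk))
            for k in range(frame_size // 2 + 1)
        ])
    return frames

def onset_strength(frames):
    """Total deviation of each frame from a 'nothing changed' prediction."""
    # The first two frames have no history to predict from, so they score 0.
    strength = [0.0] * min(2, len(frames))
    for n in range(2, len(frames)):
        total = 0.0
        for k in range(len(frames[n])):
            prev, prev2 = frames[n - 1][k], frames[n - 2][k]
            # Steady tone: same magnitude, phase advancing at a constant rate.
            expected_phase = 2 * cmath.phase(prev) - cmath.phase(prev2)
            predicted = cmath.rect(abs(prev), expected_phase)
            # A jump in energy or in phase both move the bin away from this.
            total += abs(frames[n][k] - predicted)
        strength.append(total)
    return strength
```

Feeding in silence followed by a steady tone gives a curve that is zero during the silence, spikes over the few frames where the tone enters, and settles back toward zero once the tone is stable, which is the kind of "total change amount" signal the rest of the pipeline works with.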

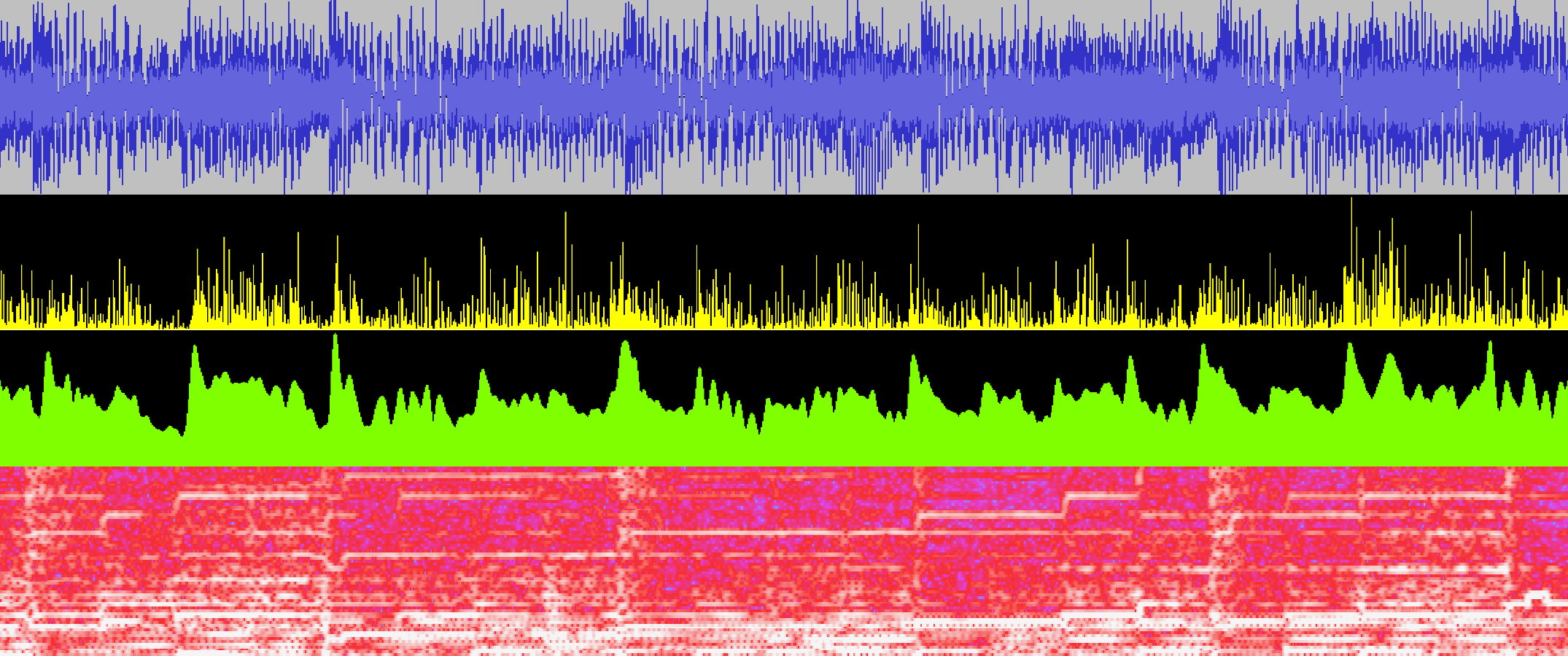

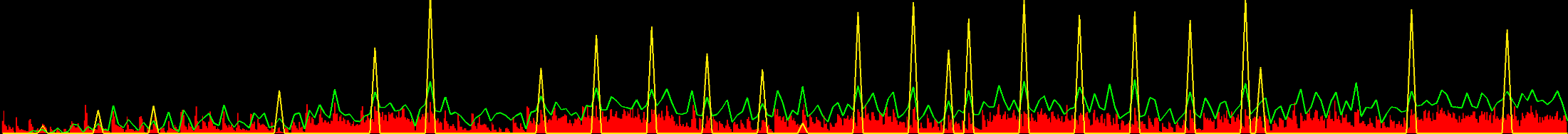

Here's a rough visualization of what that "total change amount" looks like, along with the other signal representations. The yellow spiky line is the raw "total change amount" data that I use for the rest of the computations, the green graph is just a smoothed out version of that to show that it does indeed map onto the beats of the song.

Here's a simpler example where you can see it a little more clearly:

In some approaches, you take this "change amount" and try to run some sort of thresholding to pick out discrete onset/beat events. I chose not to do this and instead leave the signal as a continuous one. As you'll see in the next section, we don't actually need to pick out discrete beats in order to find the tempo. (One advantage of this is that we can also make use of information that lies in between beats.)

Comb Filtering

The next step is to look for regularities in the onsets (the yellow graph) so we can determine the actual tempo. The way I do this is simply to try many possible tempos (all the way from 60 BPM to 180 BPM) and see which one(s) "matches" the graph best.

How do we measure how well a given tempo "matches" the graph? The way I chose (referenced in some of the literature) is to use comb filters. A feedback comb filter is basically a fancy way of saying we are going to put an echo on the signal.

I run the onset graph through many different comb filter delays (echo lengths), corresponding to each candidate tempo. The hope is that if the delay matches the actual tempo of the song, then we end up getting more feedback resonance than if not, so the resulting signal amplitudes will be higher. That ends up being true! In the below graph the blue/cyan line represents the comb filter output for an incorrect tempo, and the green line represents the filter output for a matching tempo.

Both of them start out relatively similar, but you can see that as the resonance kicks in, there's a feedback effect on every beat (since there tend to be note onsets there more often), which causes a higher signal amplitude.

After calculating the comb filter output for all candidate tempos, I simply choose the tempo whose comb filter output is highest overall. Sometimes more than one tempo stands out from the rest -- this often happens when the song has strong syncopated patterns, so for example the detector could find 160BPM as a valid candidate in addition to 120BPM. Right now I just have it pick the top one, but in the future I could build some sort of UI to suggest multiple tempos when it isn't quite sure.
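Here is a minimal sketch of the comb filter search in plain Python. Several details are assumptions rather than anything stated above: it scores each candidate by the energy (sum of squares) of the filter output, it iterates over whole-frame delays instead of BPM values, and it scales the feedback so every delay decays over the same amount of time (`half_life_s`). The `frame_rate` parameter (onset frames per second) and the function names are also hypothetical.

```python
import math

def comb_filter(signal, delay, feedback):
    """Feedback comb filter: y[n] = x[n] + feedback * y[n - delay]."""
    out = []
    for n, x in enumerate(signal):
        echo = out[n - delay] if n >= delay else 0.0
        out.append(x + feedback * echo)
    return out

def estimate_tempo(onsets, frame_rate, min_bpm=60.0, max_bpm=180.0, half_life_s=1.5):
    """Return the BPM whose echo delay resonates most with the onset signal."""
    # A tempo in BPM corresponds to a beat period of 60 * frame_rate / bpm frames.
    shortest = math.ceil(60.0 * frame_rate / max_bpm)
    longest = math.floor(60.0 * frame_rate / min_bpm)
    best_delay, best_energy = None, -1.0
    for delay in range(shortest, longest + 1):
        # Same decay time for every delay, so long echoes aren't favoured.
        feedback = 0.5 ** (delay / (half_life_s * frame_rate))
        energy = sum(y * y for y in comb_filter(onsets, delay, feedback))
        if energy > best_energy:
            best_delay, best_energy = delay, energy
    return 60.0 * frame_rate / best_delay
```

Energy is used for scoring because a plain sum of the output can't tell candidates apart: a linear filter scales the total by the same factor wherever the echoes land, whereas echoes that pile up on the beats produce a few large values and therefore a much larger sum of squares. Keeping the runners-up instead of only the maximum would be one way to surface second and third choices.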

Detecting Beat Offset

Now that we have our song tempo calculated, the next order of business is to try and figure out what the beat offset is. I'm still working on tweaking this part a little, but what I do right now is take the comb filter output and process it even more using averages and thresholding. I end up with a more discrete selection of peaks:

I use various rules for selecting these peaks -- for example, the signal at that point has to be much higher than its average, it needs to be the highest point in some proximity, and there can't be two peaks too close to each other. Note that this attempted "peak selection" isn't perfect, and usually tosses away some otherwise-relevant information (which is why I didn't do it in the previous step). But as long as I get "enough" of the correct beats, it's fine!
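The three rules mentioned above can be sketched roughly like this in plain Python. The window size, the "much higher than average" ratio, and the minimum gap are made-up values for illustration, not the ones used in the game.

```python
def pick_peaks(signal, window=8, ratio=2.0, min_gap=10):
    """Return indices of peaks that pass three simple selection rules."""
    peaks = []
    for n, value in enumerate(signal):
        lo, hi = max(0, n - window), min(len(signal), n + window + 1)
        neighbourhood = signal[lo:hi]
        local_mean = sum(neighbourhood) / len(neighbourhood)
        # Rule 1: the value must stand well above its local average.
        if value <= 0.0 or value < ratio * local_mean:
            continue
        # Rule 2: it must be the highest point in its neighbourhood.
        if value < max(neighbourhood):
            continue
        # Rule 3: it can't sit too close to the previously accepted peak.
        if peaks and n - peaks[-1] < min_gap:
            continue
        peaks.append(n)
    return peaks
```

For example, a flat signal with a big spike, a smaller bump three frames later, and another spike further along yields only the two spikes: the bump is rejected because a higher point sits within its neighbourhood.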

The last step is simply to go through all of the possible beat offset values and see which one of them lines up most with the peaks from this step. I just do this by adding all the on-beat amplitudes that would result from a given beat offset.
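A bare-bones version of that offset search might look like the following. It assumes the beat period is a whole number of frames and that `peak_signal` holds the peak amplitudes with zeros everywhere else; both are simplifications for the sketch (a real tempo rarely divides evenly into frames), and the names are hypothetical.

```python
def best_beat_offset(peak_signal, beat_period):
    """Try every offset within one beat and keep the one with the most on-beat energy."""
    best_offset, best_score = 0, float("-inf")
    for offset in range(beat_period):
        # Sum the amplitudes at offset, offset + period, offset + 2 * period, ...
        score = sum(peak_signal[offset::beat_period])
        if score > best_score:
            best_offset, best_score = offset, score
    return best_offset

def offset_to_seconds(offset_frames, frame_rate):
    """Convert an offset in frames to a start time in seconds."""
    return offset_frames / frame_rate
```

A stray peak that doesn't repeat at the beat period contributes to only one offset's score, so it gets outvoted as long as enough of the real beats were picked up.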

It Works!

Amazingly, the entire system works fairly well most of the time! It still has some troubles with certain songs, and often the start time is wrong by half a beat (I'm guessing this is because off-beats tend to be featured prominently as well), but there are always going to be exceptions like that. Again, even when it's wrong, it usually has the correct option as its second or third choice.

After I nailed down the main algorithmic implementation, I ended up doing a pass over most of the computation logic and rewriting it to make use of Unity's parallel job / burst compilation system, which helped speed things up drastically. Right now the algorithm looks at the first 30 seconds of a song, which is over a million floating point samples, so there is quite a lot of data to parse through! Before the optimizations this process was taking over 10 seconds, but it's now down to just a couple of seconds at most.

I could go on and on trying to fine-tune all of the different parameters in the system (there are a lot...), and I actually found a couple of silly bugs and noticeable improvements even while writing this devlog (hooray!). However, it's about time that I call it a wrap on this particular system and get back to making sure that everything else in the editor is well-integrated...
